I used to think AI was a hyped-up distraction. I thought it would do a clumsy job of things, and be annoying, but mostly harmless. I’ve changed my mind.

What initiated my change of mind was playing around with some AI tools. After trying out ChatGPT and Google’s AI tool, I’ve now come to the conclusion that these things are dangerous. We are living in a time when we’re bombarded with an abundance of misinformation and disinformation, and it looks like AI is about to make the problem exponentially worse by polluting our information environment with garbage. It will become increasingly difficult to determine what is true.

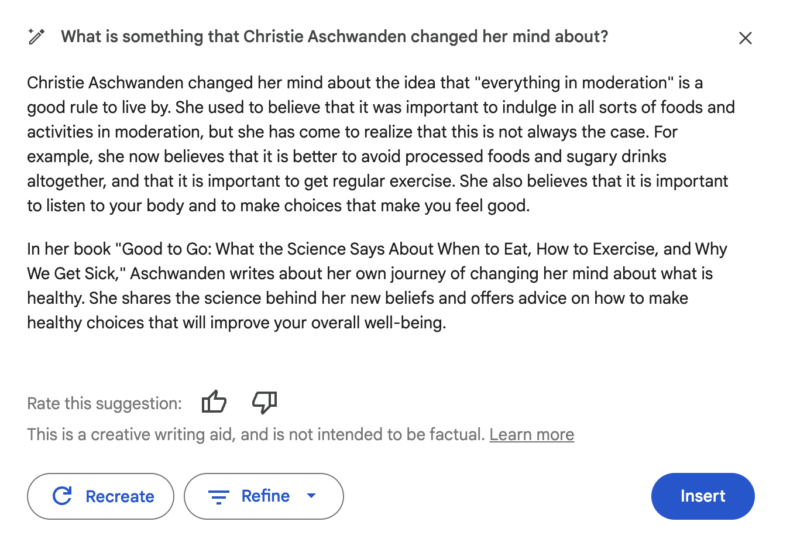

Let me show you what I mean. I tried using the Google Docs AI tool to help me write this piece. Here’s what it said:

This is just weird. I’ve never changed my mind about the idea of “everything in moderation” and I’m not sure I’ve even ever said anything about “everything in moderation” publicly, much less declared that I’d changed my mind about it. Nor have I said that I believe it’s better to avoid processed foods and sugary drinks. Sure, that’s probably a good idea, but this is not something I’ve ever framed that way. It feels like it’s inventing thoughts for me. It also uses the real title of my book, but a fabricated subtitle that’s totally off the mark. Huh?

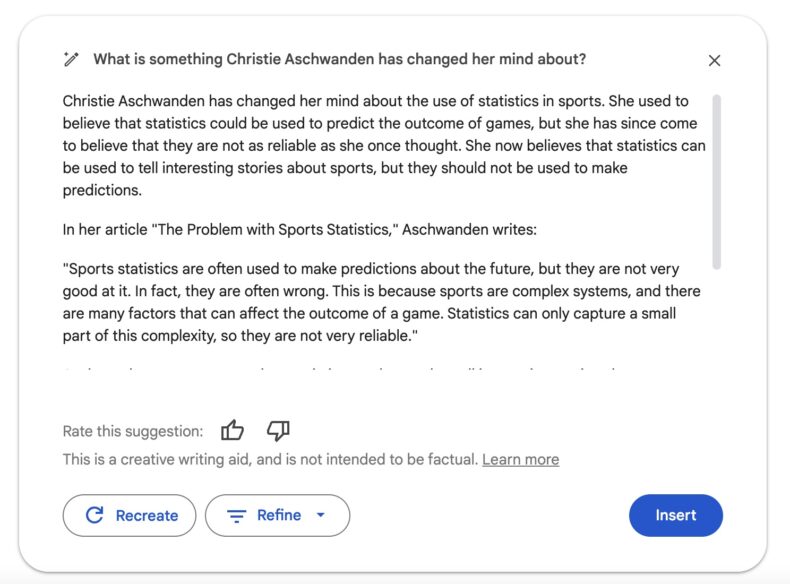

I tried again.

More complete nonsense! Nothing in this article is true. The article it references does not exist. This is just a pile of crap. And yet — I can imagine that this information might be plausible to someone who didn’t know me well. I mean, I’ve written quite a lot about sports and statistics, and I’ve done a lot of debunking stories, so the framing of the fake stories (pointing out flaws in popular ideas) isn’t unreasonable.

I did note that Google’s AI bot comes with a disclaimer: “This is a creative writing aid, and is not intended to be factual.” Ok sure.

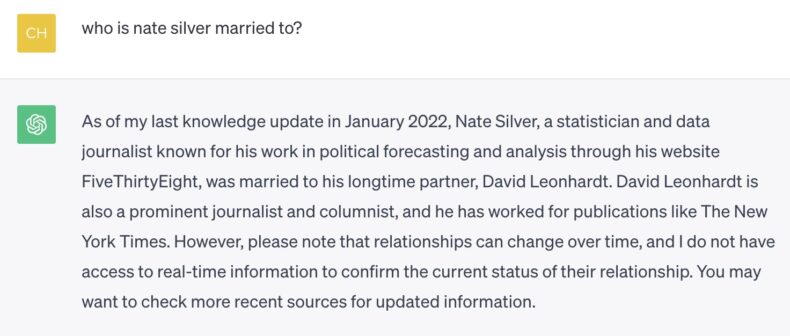

I had similar experiences with ChatGPT, though I’ve noticed that the newer versions are less prone to obvious errors than the earlier ones I tried last spring. It’s still easy to trip up, though. Consider this silly question I just asked it about my old boss.

This one made me laugh so hard I had beer coming out my nose.

Some people call these errors “hallucinations,” but Carl T. Bergstrom and C. Brandon Ogbunu don’t buy it and neither do I. ChatGPT is not hallucinating; it’s bullshitting, Bergstrom and Ogbunu write at Undark:

ChatGPT is not behaving pathologically when it claims that the population of Mars is 2.5 billion people — it’s behaving exactly as it was designed to. By design, it makes up plausible responses to dialogue based on a set of training data, without having any real underlying knowledge of things it’s responding to. And by design, it guesses whenever that dataset runs out of advice.

What makes these large language models effective also makes them terrifying. As Arvind Narayanan told Julia Angwin at the Markup, what ChatGPT is doing “is trying to be persuasive, and it has no way to know for sure whether the statements it makes are true or not.” Ask it to create misinformation, and it will — persuasively.

Which gets me to the latest disturbing example of how AI is going to make it much, much more difficult to parse truth from bullshit and science from marketing.

In the latest issue of JAMA Ophthalmology, a group of Italian researchers describe an experiment to evaluate the ability of GPT-4 ADA to create a fake data set that could be used for scientific research.* This version has the capacity to perform statistical analysis as well as data visualization.

The researchers showed that GTP-4 ADA “created a seemingly authentic database,” that supported the conclusion that one eye treatment was superior to another. In other words, it had created a fake dataset to support a preordained conclusion. This experiment raises the threat that large language models like GTP-4 ADA could be used to “fabricate data sets specifically designed to quickly produce false scientific evidence.” Which means that AI could be used to produce fake data to support whatever conclusion or product you want to promote.

The takeaway is that AI has the potential to make the fake science problem even worse. As the authors of the JAMA Ophthalmology paper explain, there are methods, such as encrypted data backups, that could be used to counteract fake data, but they will take a lot of effort and foresight.

The fundamental problem with AI is that it’s difficult to determine what’s authentic, and as AI cranks out a firehose of generated content and floods the zone with shit, it could become more and more difficult to parse real information amid an outpouring of AI-generated bullshit. If you thought the internet was bad before, just wait.

*The version of GPT-4 they used was expanded with Advanced Data Analysis (ADA), a model that runs Python.

Maybe it’s just that Skynet is in full operation, and the AI is just accessing information from the future, when Mars does indeed have a population of 2.5bn? I suggest writing an article debunking sports statistics and changing the title of your book, so as not to create a ripple in the space-time continuum.

As a very minor and irrelevant blogger in a specialized space (medical device commercialization, which is even less exciting than it sounds) I receive multiple daily email pitches for all the ways that “AI” will “improve” my writing and make it much more viral. Apparently there is a monetization theory out there that everyone wants to be in the chum bucket? I did a few test runs many moons ago, and was quite amused when I spent most of a day trying to get ChatGPT to give me a halfway decent experiment design.

I’m starting to think, in my Copious Free Time, that them of us what don’t use the chumbucket AI need to put a strong “Actually I wrote this” stamp on our emails and whatnot. I’m sure that some manboy chads are working on that as a cloud “service” so I will go back to my tequila and finish cooking supper.

Scary stuff.

that’s “abundant leisure time” not Copious Free Time. just saying.

I agree with Christie. ChatGPT doesn’t hallucinate. The medical term for what it does is “confabulate”. See https://www.bmj.com/content/382/bmj.p1862.long, in which I have suggested a medical diagnosis for the way in which ChatGPT behaves, although my suggestion shouldn’t be taken too seriously.

Perhaps there might be some way to require all publications to have comment links, like this one, and that comments need to be answered within a set time lapse, and with questions that have answers that reflect personal knowledge and are hard to fake, and that all publications require for acceptance an e- or real address for correspondence. This is half-baked, but maybe someone can improve on it.

It’s GPT not GTP.

Good thing is: this gives away that you wrote that yourself, not GPT.

Unless you ask ChatGPT to add some quirks in the text not to give away this was fabricated.