If you’re like most people, you haven’t heard of Roko’s Basilisk. If you’re like most of the people who have heard of Roko’s Basilisk, there’s a good chance you started to look into it, encountered the phrase “timeless decision theory”, and immediately stopped looking into it.

However, if you did manage to slog through the perils of rationalist philosophy, you now understand Roko’s Basilisk. Congratulations! Your reward is a lifetime of terror and agony, enslaved to a being that does not yet exist but that will torture you for all eternity should you deviate even for a moment from doing its bidding. At least, according to internet folklore.

The internet has no shortage of BS and creepy urban legends, but because Roko’s Basilisk involves AI and the future of technology, otherwise-credible people insist that the threat is real. Some even consider it so dangerous that Eliezer Yudkowsky, the founder of the rationalist forum Less Wrong, fastidiously scrubbed all mention of the term from the site. “The original version of [Roko’s] post caused actual psychological damage to at least some readers,” he wrote. “Please discontinue all further discussion of the banned topic.”

Intrigued? Yeah, me too. So despite the warnings, I set out to try to understand Roko’s Basilisk. By doing so, was I sealing my fate forever? And worse – have I put YOU in mortal danger?

This is a science blog, so I’m going to put the spoiler right at the top: Roko’s Basilisk is stupid. Unless the sum of all your fears is to be annoyed by watered-down philosophy and reheated thought experiments, it is not hazardous to keep reading. However, although a terrorising AI is unlikely to reach back from the future to enslave you, there are some surprisingly convincing reasons to fear Roko’s Basilisk.

But let’s start from the beginning. Who is Roko and what’s with the basilisk?

“Roko” was a member of Less Wrong, an online community dedicated to “refining the art of human rationality”. In 2010 he proposed a disturbing idea to the other members: that a hypothetical, but inevitable, artificial superintelligence would one day come into existence. It would be a benevolent AI, set on minimising the suffering of humanity. Because it would be endowed with this intrinsically utilitarian mindset, it would count every day it did not yet exist as a day of suffering it could have prevented. It would therefore punish anyone insufficiently supportive of its goals, and those goals include coming into existence in the first place, as early as possible.

That means it would be especially critical of people in its past: anyone who had heard about the possibility of creating a utilitarian super-AI but had not decided then and there to drop everything and devote their life to midwifing it into existence. To punish those people, the AI would create a perfect virtual simulation of them based on their data, and then torture them for all eternity. (In case you can’t muster sympathy for a future copy of yourself, there are also some noodling offshoots of this thought experiment that posit it will develop time travel and rendition you from your own past for torture.)

So that’s why you’re supposed to be so terrified now. If you take all this at face value, then merely by telling you about this superintelligence, I have increased the chances of its coming into existence. Worse, I have made you one of the people who knew about it and could have helped, and therefore raised your chances of eternal punishment. Like the serpent of European lore for which the thought experiment is named, the basilisk is bad news for anyone who looks directly at it.

If this is all starting to seem a bit familiar, it’s because this is basically the deal offered by many religions: good news, there’s an omnipotent, benevolent superintelligence! If you don’t believe in it and support its agenda, it will torture you for all eternity!

In fact, as many have pointed out, Roko’s Basilisk is remarkably similar to a bit of religious apologia known as Pascal’s Wager.

Pascal’s Wager posits belief in God as a kind of eternal insurance policy: if you believe, and it turns out you were right and God does exist, then you’ve hit the jackpot. You’re fine. If you believe, but you were wrong, and God does not exist, you’re still fine. You’re just dead and you’ll never be the wiser. If you don’t believe, and you were right, and God does not exist, once again you’re fine. But. If you don’t believe, and you were wrong, and God does exist? There’s literally hell to pay.

So is Roko’s Basilisk just Pascal’s Wager for the Soylent set? The kind of rationalist who would propose a future technological superintelligence is not the kind of person who has any patience with religious tropes. Nonetheless, scholars have taken note of the similarities. “This narrative is an example of implicit religion,” says Beth Singler, an anthropologist at the Faraday Institute for Science and Religion. “It’s interesting that this explicitly secular community is adopting religious categories, narratives and tropes.”

This growing belief in a godlike AI is taking place against the backdrop of a precipitous decline in traditional organised religion and a surge in the popularity of utilitarian philosophy.

Utilitarianism has it that the most moral decision is always the one that provides “the greatest good for the greatest number.” This kind of scientism is especially attractive to techies. “Its emphasis on numbers can elide the messier aspects of human experience,” says Singler.

Variants of utilitarian ethics are proliferating all around us – the effective altruism movement, for example, tries to take the complications out of ethical decisions with a “by the numbers” approach.

The serious philosophers behind effective altruism grapple with the troubling implications of adopting a strict utilitarian ethic (see for example the trolley problem). But not everyone on the internet is a serious philosopher, and internet philosophy is known to take shortcuts.

Roko’s Basilisk seems to be an uneasy marriage of utilitarian ethics and implicit religion. It might be a sign of what’s to come. There are a few new religions knocking around based on the worship of AI, notably the Way of the Future church. Singler says they have not quite worked out their tenets yet, but there is a good chance that any religion founded on computing power will draw on utilitarianism.

So is our next god a utilitarian tech bro? Christ, let’s hope not. Because if it is, Roko’s Basilisk wears a fedora and quotes Jordan Peterson.

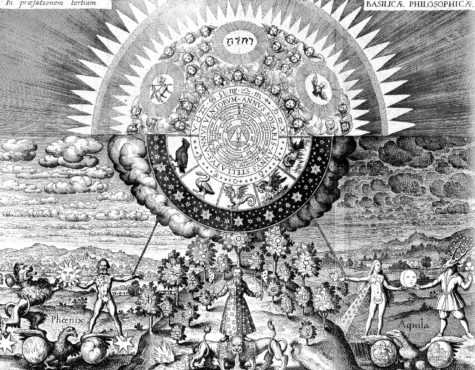

Image credits:

Illuminati-looking picture: GuzzoniGiovanni – Own work, CC BY-SA 4.0

Allegory of the strength of faith by Johann Michael Zinck of Neresheim. Wolfgang Sauber – Own work, CC BY-SA 3.0