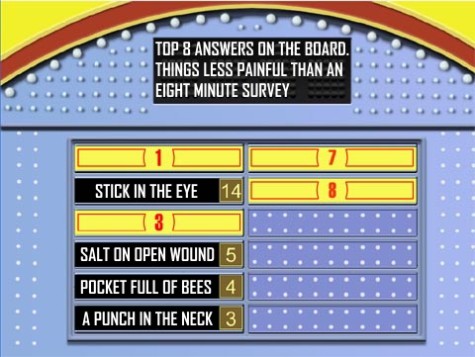

On a scale of 1 to 10, 1 being “not at all” and 10 being “very much”, how much has the sight of this question made you die inside?

You’re not alone. Surveys are dreadful: often badly worded, usually tedious, always demanding more of your time than they deserve. Yet they’re a pillar on which a lot of soft science rests. Epidemiologists use them to track disease behaviour. Sociologists use them to determine rates of breastfeeding. World governing bodies use them to determine world rankings of countries’ education systems.

A few days ago a scandal broke about falsified survey data in a study of attitudes toward marriage equality. Turns out this is more common than you’d think. So should you trust any survey?

A lot of research into that question has focused on ways that people taking surveys can foul the data. Even unbiased questions can yield bad answers because of psychological traps. Are you doing a survey about racial attitudes? The answers you get will depend drastically on whether the interviewer is white or black. On voting patterns? After an election, 25 per cent of people who didn’t vote will lie and tell you that they voted.

There are ways to game these psychological traps. When you’re asking the would-be liar about his voting history, for example, add a qualifier like “many people do not vote because something unexpectedly arose” (a little salve for the nonvoting ego) and presto, your non-voter tells you the truth. Or you could play bad cop: to suss out accurate racial attitudes, tell the respondent that they are being psychologically monitored for signs of deception. It’s mean, but it works.

But psychological traps aren’t the only problem. Some people just don’t want to be taking your damn survey. They will pee in your data pool by “satisficing” — circling strings of numbers at random and not taking the survey seriously. To defeat satisficing, researchers narrow their population of survey takers to people who have giant nerd boners for the topic they’re being quizzed about.

So have these tricks outwitted all the worse angels of our nature? Hardly. Jörg Blasius, a sociologist at the University of Bonn, doesn’t trust survey data no matter how much you compensate for people’s laziness and self-deception. Unlike the psychologists who scour survey questions for various inadvertent biases, Blasius and his colleague Victor Thiessen study them for evidence of misconduct. What they found shocked them.

A little background: a few years back, Germany was rocked by a phenomenon called “Pisa shock”. This happens when the world education rankings come in and a country’s rankings are surprisingly low. Germany had found itself rubbing elbows with — gasp! — the US. Birthplace of creationism! Kansas! Jenny McCarthy, doctor of vaccines! How could Germany’s vaunted educational system be on par with the home of the hotdog-stuffed pizza crust?

The survey that yields this rankings table — Pisa, or Programme for International Student Assessment — is arguably the gold standard of surveys. It is administered by the Organisation for Economic Cooperation and Development, a global body whose spit-polished research influences a lot of policy.

Sifting through the data, however, Blasius and Thiessen found unlikely clusters of numbers that indicated satisficing. Which didn’t add up. The setup should have been perfect: the survey-takers were school principals, taking a survey about their own schools. Were they motivated to complete the survey? Yes – the OECD survey is a chance to show your school in its best light. Were they intelligent? Sure – they’re school principals. Did they have nerd boners for the topic? They’re school principals. There was no reason these people should have been satisficing.

One possible explanation was that the principals had simply been pressed for time. I don’t care who you are, no one has time to take a survey. Especially when the survey has questions that require math and research, which this one did. Faced with higher-ups breathing down their necks, did the principals do the bare minimum to make this survey go away?

Maybe, but Blasius’ statistical analyses were finding too much repetition even for satisficing.

That was when they realised what they were seeing. It wasn’t the principals taking shortcuts — it was the survey administrators. They had copied the data from one survey and simply hit paste, paste, paste when entering the data into the spreadsheet. Blasius isn’t in the business of offering motives, but he speculates that in some cases it might have been a cover-your-ass maneuver.

Here’s what he means. Let’s say you’re an OECD survey administrator and you misplaced a box of completed surveys that need to go into the master spreadsheet. The deadline is looming. “They were probably under a lot of pressure to get everything in perfectly,” he says. But you can’t ask 200 principals to repeat the survey, so instead you panic and take one survey you deem representative, copy its results 200 times into the statistics software, and cross your fingers that you don’t get caught.

In some ways, it’s the perfect crime, he says:

> Inordinate resources would be required to check for duplicates in hundreds or thousands of interviews with hundreds of questions. Therefore, the chance of being detected and risking sanctions for such deviant behavior is rather low, while the rewards for “exemplary performance” might be high.

How prevalent was this fraud? In some countries, employees of the institutes actually duplicated large parts of their data. In the crudest cases, complete duplicates (except for the school identification number) were found. Which is brazen! Not to mention lazy: “A single change in the entire string of numbers,” Blasius says, “and we would have failed to identify these cases as identical.”
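To make the "complete duplicates" check concrete, here is a minimal Python sketch. It is not the method Blasius and Thiessen used; it is just an illustration of exact-match screening, and the field names (`school_id`, `q1` and so on) and the toy data are made up. It groups respondents whose answers match on every field except the ID.

```python
from collections import defaultdict

def find_duplicate_responses(rows, id_field="school_id"):
    """Group respondent IDs whose answers are identical in every field except the ID."""
    groups = defaultdict(list)
    for row in rows:
        # Build a hashable key from every answer, skipping the ID column.
        key = tuple(sorted((k, v) for k, v in row.items() if k != id_field))
        groups[key].append(row[id_field])
    # Keep only the answer strings shared by more than one respondent.
    return [ids for ids in groups.values() if len(ids) > 1]

# Hypothetical toy data: field names and values are invented for illustration.
responses = [
    {"school_id": 101, "q1": 3, "q2": 5, "q3": 1},
    {"school_id": 102, "q1": 3, "q2": 5, "q3": 1},  # exact copy of 101
    {"school_id": 103, "q1": 3, "q2": 5, "q3": 2},  # one answer changed
    {"school_id": 104, "q1": 4, "q2": 2, "q3": 4},
]

duplicates = find_duplicate_responses(responses)
print(duplicates)
```

Note that school 103, which differs from 101 in a single answer, sails through. That is exactly the weakness Blasius describes: an exact-match screen only catches the laziest copy-and-paste jobs.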

The degree of damage to the survey’s credibility varies by country. Worst offenders were Italy, Slovenia, and Dubai/UAE, where the researchers flagged “an alarming number of cases”. Finland, Denmark, Norway and Sweden, on the other hand, had pristine data that didn’t contain a single dodgy repeat. The full name-and-shame is written up in the July 2015 issue of Social Science Research.

Blasius thinks his paper “heavily underestimated” the fabrication. “Since we did not screen for more sophisticated forms of fabrication,” the authors wrote, “the instances we uncovered here represent the lower bound of its prevalence.”

Will there be any consequences? Probably not. “Perhaps a few people will be shuffled around,” he told me, “or a few policies readjusted internally at OECD.”

But the problem is bigger anyway. If the OECD, the gold standard of surveys, is this rotten, how can we possibly trust the lesser ones? Do “24 percent of people around the world” truly consider the United States to be the biggest threat to peace, second only to Pakistan’s 8 per cent? Would almost one third of American adults actually “accept a TSA body cavity search in order to catch a flight”? Are you really a Carrie and not a Miranda?

I just hope this lengthy post about surveys and the people who ensure their accuracy doesn’t go too viral and crash LWON’s servers.

Image credits

Family Feud survey survey: Greg Sherwin, originally at coffeeratings.com

Here’s Johnny: shutterstock

Thrilled survey takers: shutterstock

Any sophisticated survey will have an internal metric for validity. Any single survey result that doesn’t score high enough on the validity scale will be flagged. Often these validity scales are based on groups of similar questions, e.g., “How smart/intelligent/knowledgeable are your students?” In most contexts these answers are highly correlated.
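As a rough illustration of that kind of validity check, here is a small Python sketch. The item names, the `respondent_id` field, and the `max_spread` threshold are all assumptions made up for the example; real validity scales are usually more elaborate. The idea is simply to flag anyone whose ratings on near-synonymous questions are far apart.

```python
def flag_inconsistent(rows, item_group, max_spread=2, id_field="respondent_id"):
    """Return IDs of respondents whose answers to near-synonymous items disagree."""
    flagged = []
    for row in rows:
        answers = [row[item] for item in item_group]
        # Near-synonymous items should get near-identical ratings.
        if max(answers) - min(answers) > max_spread:
            flagged.append(row[id_field])
    return flagged

# Hypothetical responses on a 1-5 scale.
rows = [
    {"respondent_id": "A", "smart": 4, "intelligent": 4, "knowledgeable": 5},
    {"respondent_id": "B", "smart": 1, "intelligent": 5, "knowledgeable": 2},
]

suspect = flag_inconsistent(rows, ["smart", "intelligent", "knowledgeable"])
print(suspect)
```

Respondent B rates the same students 1 for "smart" and 5 for "intelligent", so B gets flagged. The threshold is a judgment call; set it too tight and you flag honest people who read the questions differently.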

It should also be a requirement to publish the complete dataset, and the analysis, including notations for excluded data. If someone else can’t follow your data and your analysis, it doesn’t count. In the old days lab notebooks were kept for just this reason. These days peer review must be followed by data review.

Data review is an interesting suggestion. I wonder if that would make it even harder for open journals to thrive?