I just wrote a story about robots whose brains are based on the neural networks of real creatures (mostly cats, rats and monkeys). Researchers put these ‘brains’ in an engineered body — sometimes real, sometimes virtual — equipped with sensors for light and sound and touch. Then they let them loose into the world — sometimes real, sometimes virtual — and watch them struggle. Eventually, the robots learn things, like how to recognize objects and navigate to specific places.

These so-called ‘embodied’ robots are driven not by a top-down control system, but by bottom-up feedback from their environment. This is how humans work, too. If you’re walking on the sidewalk and come across a patch of gravel, your feet and legs feel the change and rapidly adjust so that you don’t topple. You may not even notice it happening. This embodied learning starkly contrasts with most efforts in the artificial intelligence field, which explicitly program machines to behave in prescribed ways. Robots running on conventional AI could complete that sidewalk stroll only by referring to a Walking-On-Sidewalk-with-an-Occasional-Patch-of-Gravel program. And even then, they’d have to know when the gravel was coming.

Most advocates of embodied AI are motivated by its dazzling array of potential applications, from Mars rovers to household helpers for the elderly. But I’m more curious about the philosophical implications: whether, in loaning robots visual, memory, and navigational circuits from real biological systems, the researchers might also be giving them the building blocks of consciousness.

That may sound far-out. And I’ll admit up front that some neuroscientists are extremely skeptical of the idea of a conscious robot. Baroness Susan Greenfield of the University of Oxford (and of Greenfieldisms fame) notes that roboticists fail to account for the myriad ways in which the wet parts of our brains — neurotransmitters, hormones, biological materials — contribute to consciousness. More fundamentally, she says, consciousness is a slippery concept. If someone were to claim that a robot was conscious, how would anybody verify it? “I really wish people would sit back from their fancy machines and think about this question,” she told me.

Point taken. But now back to those fancy machines.

Plenty of researchers argue that conscious robots are possible because, fundamentally, consciousness begins with a sense of what and where your body is.

For example, a few years ago, Olaf Blanke’s team at the École Polytechnique Fédérale in Lausanne, Switzerland, made headlines for inducing out-of-body experiences in people. Wearing virtual reality goggles, volunteers saw live video footage of their own back. When researchers simultaneously stroked participants’ real back and the virtual one with a stick, most subjects had the distinct and jarring feeling of actually being inside the virtual body.

These findings contradict most philosophy textbooks, which regard our sense of self as the highest form of evolution. Instead, “We think that the type of body representation that generates where you are in space and what you consider as your body is a very primitive form of self,” Blanke says.

Ok, so then the question becomes, what would you need to go from a “primitive form of self” to human consciousness?

“The biggest challenge for embodied AI is to build a machine that cares about its actions and in some way, for that reason, enjoys a form of freedom,” notes Ezequiel Di Paolo, a computer scientist at University of the Basque Country in San Sebastian, Spain.

One way to do this, he says, might be to have these primitive robots interact with each other. The logic is that the integration of their various sensory feedback loops could lead to sophisticated forms of intelligence, much like human language evolved from living in groups.

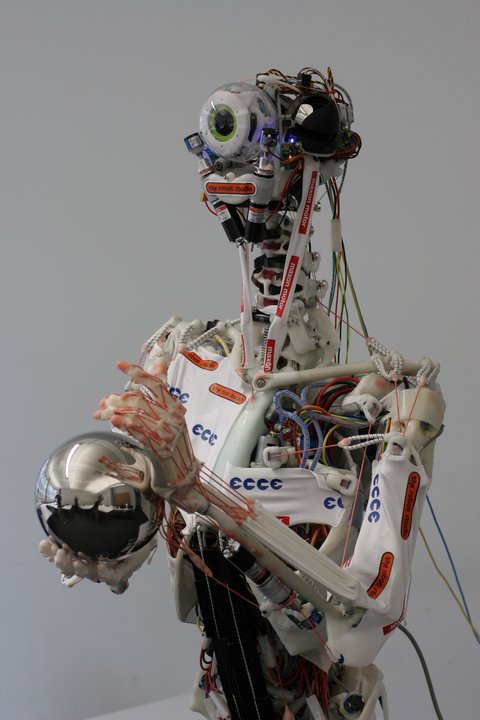

Others argue that to reach conscious awareness, embodied robots would need an extra boost of top-down programming. “In order to get to the top of the cognitive tree, a robot needs to have an internal model of itself,” says Owen Holland, professor of cognitive robotics at the University of Sussex, UK, and the creator of ECCEROBOT. That way, a robot could imagine what would happen if it performed a certain behavior, and make predictions about the best — and worst — actions to take. Our brains seem to do something similar: parts of the motor cortex activate when we simply imagine performing an action.

Conscious robots are eminently possible, Holland says. “At the moment, we’re still groping around three rungs from the floor. But if we get the right method, then it will just take time. Hopefully not billions of years.”

For the sake of this blog post, let’s consider a more realistic scenario: that in 10 or 20 or 50 years, we will have built a pre-conscious robot that responds to its surroundings, feels pain and pleasure, and has a primitive sense of self. Because these bots will inevitably be used for all of the nasty things we don’t like to do — fight in war zones, clean the house, pump gas — is it time to start thinking about how to protect them from us?

At the extreme end, philosopher Thomas Metzinger of Johannes Gutenberg University in Mainz, Germany, is arguing for an immediate moratorium on efforts to make robots with emotions until ethics are properly discussed. “We should not unnecessarily increase the amount of conscious suffering in the universe,” says Metzinger (who has been a vegetarian for 34 years).

The essential problem, he says, is that if a machine has the capacity to understand its own existence, then it has the capacity to suffer. And we don’t know how or when a robot with a simulated image of its own body and simulated gut feelings and goals will turn into an entity with self-awareness.

Metzinger is encouraged that more and more scientists are beginning to think seriously about these issues. For example, he has been funded to study ethical issues involved in the Virtual Embodiment and Robotic Re-Embodiment program, an €8 million project in which researchers from nine countries are making a system to allow a volunteer to mentally step into a virtual body and feel like it’s his own.

Conscious robots “are not coming tomorrow, or even the day after tomorrow,” Metzinger says. “But we should be extremely cautious.”

*

Cat image via Flickr

“And we don’t know how or when a robot with a simulated image of its own body and simulated gut feelings and goals will turn into an entity with self-awareness.”

In one (imagined) version of events, it happens on August 29, 1997, the date when Cyberdyne Systems’ Skynet became self-aware in the Terminator series.

I’m absolutely not trying to make light of the issues raised in this post, and I agree with you that bottom-up feedback would be absolutely necessary for the development of anything we would recognize as true “intelligence” or “self-consciousness” or “sentience”. But Herr Doktor Metzinger is right to say “we should be extremely cautious”. If sentient robot-beings do have the capacity for suffering, we should assume that they will also have the propensity of all living beings to defend themselves (body and soul). Maybe the premise of Terminator isn’t all that far-fetched.

The discussion of ethics reminds me of the Star Trek: The Next Generation episode “The Measure of a Man,” in which Data is put on trial to determine whether he is sentient. A scientist wants to take him apart to learn how to make more Datas. Data and his crew are opposed because that would kill him. It’s a thoughtful and poignant look at this very issue, even for a machine without emotions, as Data is at this point in the series.

The most ominous word in this scary post is Owen Holland’s use, in your quote, of “hopefully”. Why exactly do we need these things?

Deep Blue was a project that showed that computers could compete with the best humans at chess. But chess is a narrow problem, compared with getting out of bed in the morning.

Watson was a project that showed that computers could compete with very good humans at a problem that humans are generally good at. It should be noted, however, that Watson neither read the clue, nor heard it – a human typed it in. Watson would not have done so well without that aid.

Both projects tossed tons of hardware and software at the problems. Both projects were milestones demonstrating kinds of intelligence.

I recently coded a Sudoku solver for projecteuler.net (an educational site). There are 81 cells, each of which can be one of nine numbers. 9^81 is something like 10^77, and searching a space that large would take every computer ever built more than the age of the Universe. Each puzzle comes with some of the cells defined. This reduces the search. There are also rules (not many) which can reduce the number of symbols possible for the remaining cells. In my solver, using just a single logic algorithm solved most of the required puzzles. Five such algorithms solved all but a few. One more algorithm would have solved them all, but I opted to have the program guess at that point. After making a guess, it would see if it could solve the puzzle using the existing algorithms. The resulting program solves puzzles in under a millisecond.
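The idea in the comment above (apply logic rules first, guess only when they stall) can be illustrated with a minimal Python sketch. This is not the commenter's program: it assumes a single rule (fill any cell that has exactly one legal digit) rather than five, and the function names, the grid encoding (a flat list of 81 integers, 0 for empty) and the example puzzle are all chosen here for illustration. No timing is claimed.

```python
import math

# Size of the naive search space: 9 choices for each of 81 cells.
# log10(9**81) is about 77.3, i.e. roughly 10^77 grids.
SEARCH_SPACE_EXPONENT = math.log10(9 ** 81)


def peers_of(cell):
    """Indices of all cells sharing a row, column, or 3x3 box with `cell`."""
    r, c = divmod(cell, 9)
    box_r, box_c = 3 * (r // 3), 3 * (c // 3)
    peers = {r * 9 + j for j in range(9)}
    peers |= {i * 9 + c for i in range(9)}
    peers |= {(box_r + i) * 9 + (box_c + j) for i in range(3) for j in range(3)}
    peers.discard(cell)
    return peers


PEERS = [peers_of(i) for i in range(81)]


def candidates(grid, cell):
    """Digits not already used by any peer of `cell`."""
    used = {grid[p] for p in PEERS[cell]}
    return [d for d in range(1, 10) if d not in used]


def propagate(grid):
    """Logic step: fill cells that have exactly one candidate, repeatedly.

    Mutates `grid`. Returns False if some empty cell has no candidate.
    """
    changed = True
    while changed:
        changed = False
        for cell in range(81):
            if grid[cell] == 0:
                opts = candidates(grid, cell)
                if not opts:
                    return False
                if len(opts) == 1:
                    grid[cell] = opts[0]
                    changed = True
    return True


def solve(grid):
    """Return a solved copy of `grid`, or None if no solution exists."""
    grid = list(grid)
    if not propagate(grid):
        return None
    empties = [c for c in range(81) if grid[c] == 0]
    if not empties:
        return grid
    # Guess step: pick the empty cell with the fewest candidates,
    # try each digit, and let the logic step run again on the result.
    cell = min(empties, key=lambda c: len(candidates(grid, c)))
    for digit in candidates(grid, cell):
        trial = list(grid)
        trial[cell] = digit
        result = solve(trial)
        if result is not None:
            return result
    return None


def parse(text):
    """Turn an 81-character string of digits (0 = empty) into a grid."""
    return [int(ch) for ch in text]


# Example puzzle, assumed here purely for illustration.
PUZZLE = (
    "003020600" "900305001" "001806400"
    "008102900" "700000008" "006708200"
    "002609500" "800203009" "005010300"
)

solution = solve(parse(PUZZLE))
for row in range(9):
    print("".join(str(d) for d in solution[row * 9:row * 9 + 9]))
```

The clues and the rule shrink the candidate lists so quickly that the guess step only has to explore a tiny fraction of the roughly 10^77 raw grids.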

And that’s why researchers code solutions to problems into their robots. It’s because current hardware isn’t nearly quick enough. So, if I were coding AI, my robots would have many, many precoded skills. And, they’d be quirky. They might take seconds to figure out a visual scene, but be able to do absurd amounts of math in a second.

There are people who are working the problem. In addition to precoded skills, the robot needs to be able to learn. That might mean stochastic (statistical) learning (we do it), genetic algorithms for solution space searching, neural net learning, and maybe other ideas. But the idea that there’s some special magic that brains do that we’ll never figure out is silly.

Of late, computers aren’t getting faster with Moore’s law anymore. It’s a speed bump. It’s still exponential speedup. It will take an increasing amount of clever software to make progress. We are getting there. When will we have artificial human style intelligence? Who knows. But expect to see more milestones.

Tim asks why we need such things. Perhaps “need” is too strong a word. In the Darwinian sense, Homo sapiens doesn’t need anything except perhaps a solution to overpopulation. Skynet would certainly aid there.

Investors have bought computer systems to “play the market”. Programs have been written to do medical diagnosis. There are people who will be sold on the idea that there is an advantage. It looks inevitable.

I have no problem with the concept of ever more sophisticated models of the physical universe, within which I include both the geophysical (let’s nail down climate change) and the metaphysical (let’s try to understand the extremes of behaviour in various spheres). I’m sure it’s useful to apply all the powers of deductive and inductive logic to explore, and possibly correct, the errors that arise from our shortfall of predictive capability in all that.

But I start to struggle in two areas, neatly encapsulated in Virginia’s post. Firstly, autonomy. If we allow self-awareness to machines, then we have inevitably relinquished control – is that what we want? And secondly, to use the well chosen word from the title: soul. Can we be sure that autonomous self-aware machines will evolve an ethical and (dare I say) spiritual framework that we, their only begetters, will be content to live within?

Right, I’m off to read a few Philip K. Dick novels.

To reply to Susan Greenfield’s “I really wish people would sit back from their fancy machines and think about this question”: we do step back from our machines. When designing the MoNETA virtual and robotic animat (http://nl.bu.edu/research/projects/moneta/), questions of consciousness, qualia, and what it means to have neurons coding for pain, pleasure, “redness”, etc., pop up continuously. Here is a post written by a few of us on the topic:

http://www.neurdon.com/2010/12/07/moneta_and_the_c_word/